From Hype to Reality: AI Hype Cycle vs. Failed Pilots

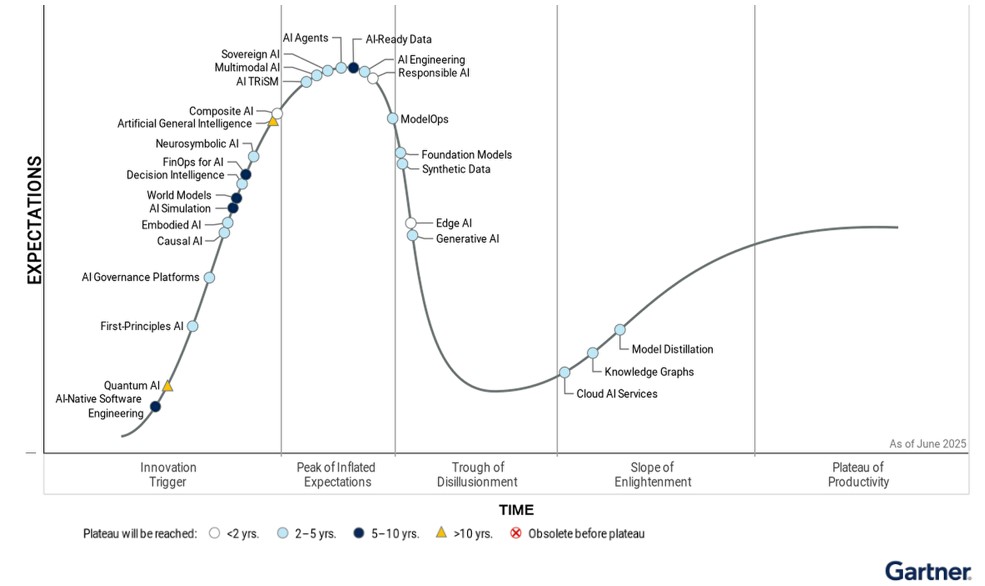

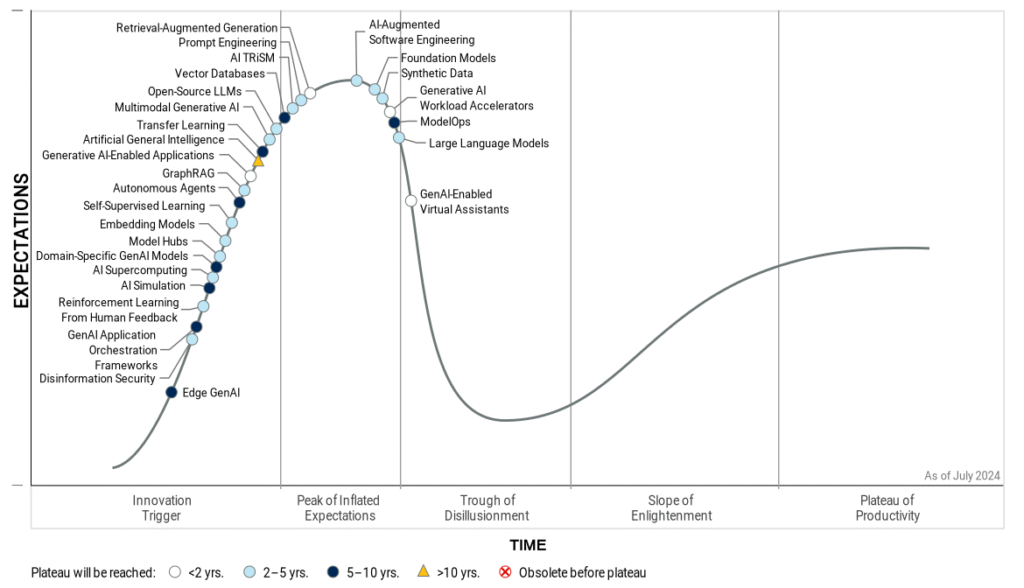

Each year Gartner’s AI Hype Cycle captures the mood of the market, and the comparison between those of 2024 and 2025 is revealing. Last year, the curve was dominated by Generative AI, with copilots and creative tools sitting right at the peak of inflated expectations. This year, the center of gravity has shifted. AI-ready data, AI agents, and governance frameworks like AI TRiSM now hold the spotlight, while generative models occupy a quieter, more pragmatic place on the curve. It is clear that the conversation has moved from fascination with what AI can create to the hard work of building the foundations that make adoption possible.

Reading the AI Hype Cycle: From 2024 to 2025

The Gartner Hype Cycle has long been a trusted barometer for where a technology stands, and the same holds for AI. In 2024, the spotlight was on Generative AI, with tools and platforms clustered at the “Peak of Inflated Expectations.” This year, the picture looks different. Gen AI still matters, but the focus has shifted toward AI-ready data and AI agents, the foundations that make large-scale AI adoption sustainable.

This shift is telling. Early enthusiasm for flashy applications is giving way to harder, more structural questions. Can organizations trust their data? Are they equipped to deploy AI in ways that scale? Will agents and multimodal models integrate into real workflows without creating new risks? These questions dominate the 2025 cycle.

The comparison between the two years makes the change visible. Last year was about experimentation and imagination. This year is about building the scaffolding needed to deliver impact.

The Shift: From Generative AI to Foundations

Reading the two Hype Cycles alongside related reports and other industry data yields some interesting insights. [See a comparison of the two Hype Cycles, and other Insights]

Generative AI reached mass attention rapidly. Copilots, text-to-image tools, and conversational interfaces defined the 2024 hype cycle. They improved quickly and delivered creativity on demand and productivity gains across industries. Yet the sheer speed of adoption also exposed limits. Many pilots dazzle in demos but struggle in production at scale. To an extent, we seem to be watching the early years of Digital Transformation repeat themselves.

The 2025 hype cycle reflects that reality. AI-ready data now takes center stage, a reminder that no model can perform well without reliable inputs. AI agents represent the next frontier, showing how automation might extend beyond isolated tasks (or point solutions) into coordinated systems that can act with autonomy. Governance frameworks such as AI TRiSM highlight another truth: enterprises need trust, transparency, and accountability before they can scale deployments.

Taken together, the shift suggests a level of maturity. The market is no longer asking only what AI can create. It is asking what infrastructure, guardrails, and processes must be in place for AI to deliver lasting value.

Why AI Pilots Fail: The Seven Root Causes

Reading the AI Hype Cycle in isolation is not enough. The fuller picture emerges when we connect it with industry data, case studies, and the steady stream of news about stalled or abandoned AI programs. Together, these reveal why an estimated 80% of AI pilots never scale into full implementations.

Seven recurring root causes stand out:

- Data fragility – Models falter when the underlying data is incomplete, siloed, or low quality.

- Misaligned expectations – Early hype fuels unrealistic goals, leading to disillusionment when outcomes fall short.

- Integration challenges – Pilots often sit in isolation, unable to mesh with core systems and processes.

- Governance gaps – Without clear policies on measurement, ethics, risk, and compliance, projects stall.

- Skills shortage – Few organizations have the right mix of technical and domain expertise.

- Cultural resistance – Employees view AI as disruptive, not enabling, and adoption falters.

- ROI uncertainty – When benefits don’t align with enterprise goals, or are unclear or hard to measure, investment dries up.

These are not isolated issues, but symptoms of a deeper problem. Organizations have raced ahead with pilots without building the capabilities required for scale. The hype cycles tell us where the energy is, but the failure data shows us why enthusiasm often collapses before results arrive.

Why a KPI Framework Matters for AI Success

If the hype cycles show us where attention is moving, and the failure data shows us why projects stall, then the missing link is clear: organizations lack a way to measure and manage AI initiatives in a disciplined manner. A robust KPI framework for AI implementations provides that anchor.

Such a framework connects the technical with the strategic. It makes it possible to evaluate not only whether a pilot works, but also whether it contributes to enterprise outcomes such as efficiency, growth, risk reduction, or new revenue streams. By measuring readiness, adoption, and impact across the seven root causes of failure, companies can identify weaknesses before they sink investments.

Equally important, a KPI framework turns AI from an experiment into a managed capability. It creates a shared language between technical teams and business leaders. It clarifies how success is defined, how progress is tracked, and when scale is justified. In short, it helps enterprises move from chasing hype to delivering value.
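To make the idea of scoring readiness, adoption, and impact across the seven root causes more concrete, here is a minimal sketch of what such a scorecard might look like. Everything in it is an illustrative assumption rather than a prescribed method: the 0 to 5 scale, the simple average, the threshold of 3.0, and the names `CauseScore` and `weakest_areas` are all hypothetical.

```python
from dataclasses import dataclass

# The seven root causes named in this article.
ROOT_CAUSES = [
    "data_fragility",
    "misaligned_expectations",
    "integration_challenges",
    "governance_gaps",
    "skills_shortage",
    "cultural_resistance",
    "roi_uncertainty",
]


@dataclass
class CauseScore:
    """Hypothetical 0-5 scores for one root cause (5 = strongest)."""
    readiness: int
    adoption: int
    impact: int

    def average(self) -> float:
        # Assumption: the three dimensions are weighted equally.
        return (self.readiness + self.adoption + self.impact) / 3


def weakest_areas(scorecard: dict[str, CauseScore], threshold: float = 3.0) -> list[str]:
    """Return root causes whose average score is below the threshold, weakest first."""
    missing = [c for c in ROOT_CAUSES if c not in scorecard]
    if missing:
        raise ValueError(f"scorecard is missing root causes: {missing}")
    flagged = [
        (scorecard[c].average(), c)
        for c in ROOT_CAUSES
        if scorecard[c].average() < threshold
    ]
    return [cause for _, cause in sorted(flagged)]


# Example with made-up scores: a pilot that is solid everywhere
# except data quality and ROI clarity.
scorecard = {cause: CauseScore(4, 4, 4) for cause in ROOT_CAUSES}
scorecard["data_fragility"] = CauseScore(2, 1, 3)
scorecard["roi_uncertainty"] = CauseScore(3, 2, 2)
print(weakest_areas(scorecard))  # ['data_fragility', 'roi_uncertainty']
```

In practice an organization would likely replace the equal weighting and the fixed threshold with measures tied to its own goals. The point of the sketch is only that a shared, explicit scorecard lets technical teams and business leaders see the same weak spots before deciding to scale.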

From Hype to Impact: Building the Bridge

The comparison of the 2024 and 2025 AI hype cycles is more than an alphabet soup of buzzwords. It holds up a mirror to how the field is maturing, and it is a reminder of where organizations stumble. GenAI highlighted possibilities, while the current focus on data, governance, and agents shows what is necessary.

Despite the industry's enthusiasm, the recurring failure of AI pilots highlights a structural problem. Enterprises are not measuring readiness or outcomes in a way that connects innovation with strategy. Without that bridge, excitement fades, and investments stall.

A KPI framework for AI offers a path forward. It helps leaders separate signal from noise, see beyond the cycle of hype, and ensure that every successful pilot, every deployment, and every scaled system is tied to measurable value.

The story of AI will likely not be written by the next spike in the hype cycle. It will be written by organizations that build the discipline to turn experimentation into enterprise impact.