The 98% Lie: Why Scaling AI Pilots Will Fail (And It’s Not the Reason Your Consultants Told You)

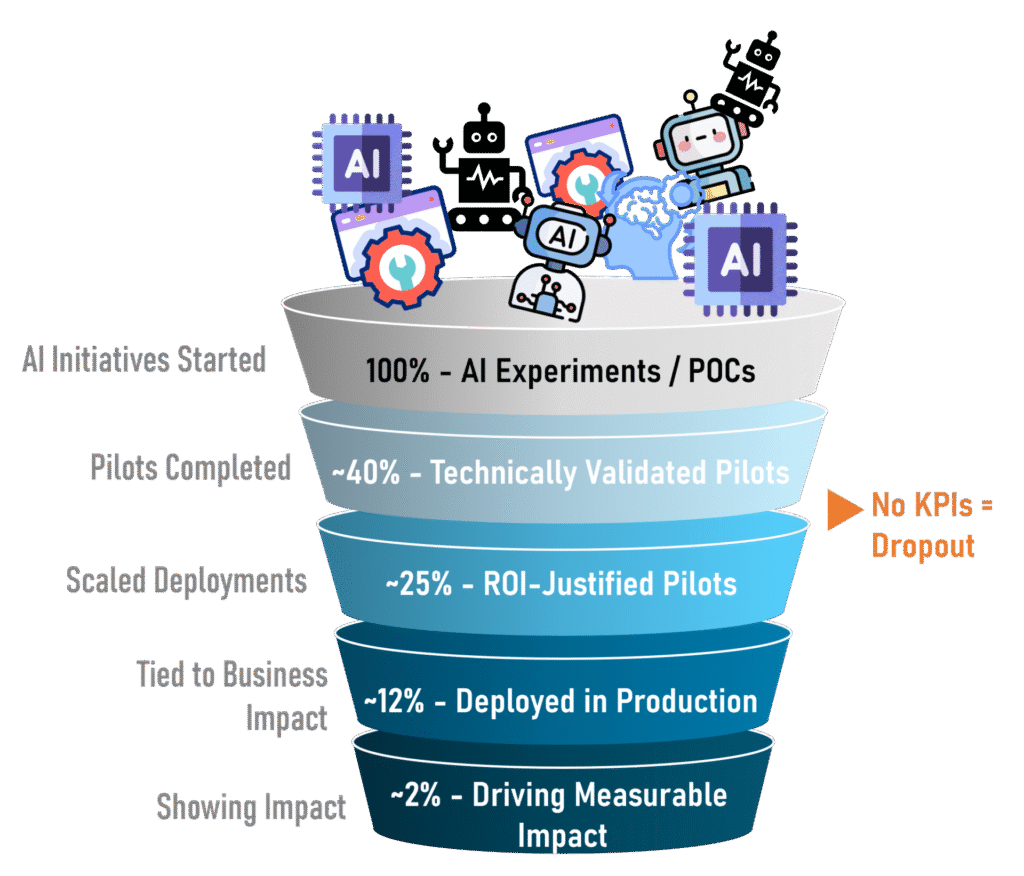

We understand: the promise of Artificial Intelligence (AI) is irresistible. It is fueling multi-million-dollar budgets based on confidence alone. But you must face a difficult truth: your AI ambition is on a path to failure. Look closely: AI ambition is not translating into enterprise impact. The data is stark, and you already know it: only 2-3% of the initiatives that start with excitement ultimately drive measurable business impact. But if you keep believing the problem is simply a "lack of alignment with business outcomes," as everyone is claiming, you are dangerously wrong. The truth is a far more complex, four-part systemic breakdown that you are not prepared for.

Your current AI strategy is oblivious to this breakdown. This isn't a technology problem; it's a readiness crisis.

The Four Fatal Flaws Killing Your Ability to Scale AI Pilots

Most AI pilots fail not because the algorithm was wrong, but because the enterprise infrastructure (governance, metrics, budget, and security) was unprepared for success. Four flaws in particular are stalling the progress of enterprise AI:

1. The KPI Trap: Scoring a Goal Without Measuring the Game

We have discussed this before, and it is perhaps the best known of the four. Most AI pilots succeed at a technical level: the model reaches, say, 92% accuracy in the sandbox environment. The fatal mistake is stopping the measurement there.

The vast majority of AI initiatives launch without a flexible KPI framework that stretches beyond the MLOps dashboard. If your metrics measure only model performance (e.g., accuracy, precision, recall) and fail to connect directly to enterprise financial KPIs (e.g., lower cost-to-serve, reduced working capital, faster time-to-market), the project will eventually stall. When the time comes for scaled investment, leaders can't trace the model's success back to the bottom line, resulting in an immediate "No ROI = Dropout" decision. You may have proven that the technology works, but not that it makes money.

2. The Budget Overrun: Unexpected Data Scope Creep

A proof of concept (POC) is inexpensive because it uses a limited, carefully curated, and often synthetic dataset. When a pilot attempts to scale from a single point solution to an entire process line, the data requirements balloon.

The project now needs access to new data sources, different formats, and complex integration layers that were never accounted for in the initial budget, because no comprehensive ROI calculation was done up front. These hidden integration and data acquisition costs trigger major budget overruns. Momentum dies, the project is flagged as financially wayward, and the plug gets pulled.

3. The CISO’s Veto: The Shadow IT Reckoning

These projects often begin in a grey area: one point solution inside a single business unit (BU). To move fast, teams rely on "shadow IT" data access protocols. The pilot itself succeeds, and now requires access to sensitive or regulated enterprise data in order to scale.

This is the moment the Chief Information Security Officer (CISO) steps in. The move from pilot to production deployment is halted by legitimate concerns over data residency, privacy, security governance, and compliance, all risks that the pilot deliberately sidestepped. You now have a potentially valuable system that your security framework forbids you to scale, because you did not plan for these requirements from the start.

4. The Hidden Tax: Systemic Poor Data Quality

The easiest roadblock to acknowledge, but often the hardest to fix, is poor data quality. Your pilot dataset was clean; your enterprise data lake is not.

As the pilot attempts to ingest real-world data at scale, it encounters incomplete, inconsistent, or unreliable data blocks. The technical team must shift from building the AI solution to cleaning the data streams. Other IT teams then have to get involved to ensure that future data arrives clean, which means changing the source applications. This is time-consuming and expensive. The AI model itself becomes a proxy for fixing decades of poor data hygiene, further straining the budget and delaying time-to-impact until the project loses all internal support.

The Truth of Enterprise Readiness: Why We Can’t Stop Repeating Mistakes

These four failures are surfacing everywhere, and they are all symptoms of the same problem: a lack of enterprise readiness. Most organizations treat AI as a technology sprint when, in reality, it is a long-term strategic evolution that requires complex coordination.

We are repeating the mistakes of Digital Transformation (DX). Just as DX initiatives failed when companies focused only on launching a mobile app or moving to the cloud without restructuring processes, retraining people, and redefining KPIs, AI is failing because we are focusing only on the algorithm. We are prioritizing the what (the AI model) over the how (the systemic integration, governance, and measurement). The difficulties in scaling AI pilots are all echoes of the short-sighted, siloed thinking that plagued the DX era.

So many leaders are left running blind because the full spectrum of necessary change has been structurally ignored. Beyond the four operational flaws described above, genuine transformation requires measuring and mitigating risks across up to ten different areas. These areas, which include culture, ethics, change management, skill gaps, and organizational design, are non-negotiable for sustained scale. Let's be blunt: if your governance framework only measures MLOps success, your organization is not ready.

Technology isn’t the barrier. The barrier is the lack of a comprehensive, structured framework for alignment, measurement, and enterprise readiness.

3nayan can help you fix this situation, or even prevent it. Give us a shout, and we can chat about this over a coffee.