The Illusion of AI: Why Most AI Implementations Are Skin-Deep and What That Reveals About Strategy

Over the past year, AI implementations have become the centrepiece of corporate ambition. Boardrooms are abuzz, analyst calls bristle with AI mentions, and slide decks open with promises of automation and machine-led insight. The headlines alone suggest that enterprises have already mastered the shift.

But look closer, and a different picture emerges.

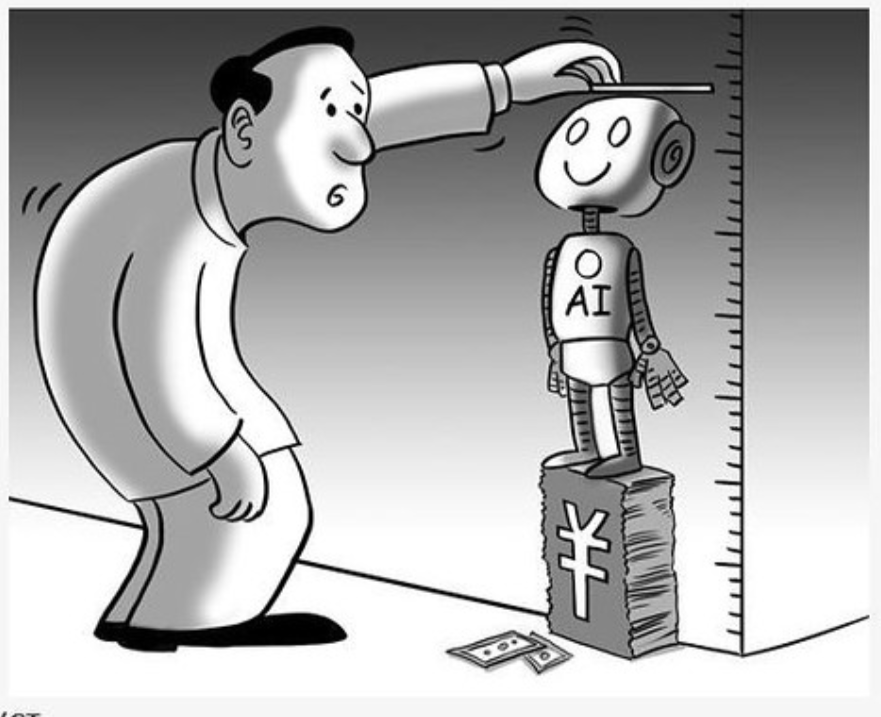

Beneath the momentum, most AI implementations remain superficial — more reactive than strategic. They signal transformation but often lack substance. Too many are driven by market optics rather than customer need, replicating intelligence instead of reimagining business value.

This series pulls back the curtain on AI adoption — not from a tech-first lens, but through the lens of strategy. We begin with a hard truth: AI is being adopted quickly, but not wisely.

The Pressure Cooker: Why AI Implementations Are Driven by Survival, Not Strategy

This is not a great time to look vulnerable. Economic uncertainty looms large. Stock markets are skittish. Valuations have softened. And in response, companies are doing what they often do in times like these: tightening belts, cutting costs, and trying to sound future-ready.

Enter artificial intelligence.

AI has become the language of efficiency, of transformation, of hope. Saying you are investing in AI signals resilience. Saying you are replacing roles with AI, though controversial, signals action. From a market optics standpoint, it is a fantastic narrative. The problem is that a narrative is often exactly what it remains.

Behind the scenes, many companies are quietly, or publicly, trimming headcount. Entire departments are being restructured or dissolved. In their place, tools are being introduced: chatbots (with limited knowledge or capability), auto-generators, workflow automations. These moves are framed as innovation, but more often than not, they are born out of short-term pressure to perform. In reality, the AI implementation happening in many organisations is not about transformation. It’s about survival.

This might explain why so many of these initiatives stall after a few months, why pilots remain pilots, and why customer experience often worsens rather than improves. It recalls the early years of digital transformation, doesn’t it?

There may be a deeper issue at play. In many cases, AI isn’t being deployed to build long-term advantage, but to deflect short-term scrutiny. With CEO tenures averaging around three years, leaders face pressure from investors, boards, and internal teams to signal bold action. And right now, nothing sends a bigger signal than AI. The irony is that most stakeholders remain unconcerned about what’s happening beneath the narrative.

Doing AI and doing it well are two very different things.

In Fashion, Out of Depth: The Style-Over-Substance Approach to AI

There is a peculiar characteristic to the current wave of AI adoption — one that feels more like theatre than transformation.

Much of what we see today is not really being done in pursuit of competitive advantage or customer experience. It is being done because AI, quite simply, is in fashion, whatever the published narrative might say. And in the corridors of corporate ambition, being fashionable is often synonymous with being prescient and forward-looking.

From boardroom decks to startups to product launches, AI implementation is now the default bullet point. It features prominently in press releases, annual reports, keynote addresses. But scratch the surface, and what often emerges is a scatter of hastily assembled pilots, vendor-led showcases, and GenAI tools applied with the elegance of a sticky plaster — where a deep business problem might require surgical reinvention.

What’s often missing is a sense of intent. And more crucially, a sense of understanding.

Missing Intent and Purpose

The casual, context-ignorant way generative models are being force-fitted into workflows is concerning. Many of these processes weren’t built for AI in the first place. Today, legal teams use these models to summarise contracts without understanding what the model knows. Marketing churns out content in minutes, often ignoring tone, accuracy, or brand. Operations teams build around chatbot outputs, only to realise too late that the bot doesn’t grasp the process or the customer’s needs.

AI implementations, in their current form, are treated as a magic wand or a silver bullet, expected to “think,” “understand,” “solve,” or “automate” without context. GenAI, in particular, has been adopted with the enthusiasm of a child in a candy store. But these systems are neither sentient nor strategic. They remix; they do not think. They operate on large blobs of existing data (often incoherent), producing content that sounds convincing but is built on probability rather than insight. And yet, they are now being entrusted with decisions that require nuance, judgment, and, ironically, intelligence.

…and then there is the customer

It’s the same pattern we saw during the early phases of digital transformation: technology leads the conversation, while purpose has long exited the room, exasperated, for a bar.

And when purpose exits, it takes the customer with it. Because at the heart of most of these implementations, one thing is conspicuously absent: the end user. These AI implementations are rarely driven by what the customer needs. They are shaped, instead, by internal urgency, brand optics, and a breathless desire not to be left behind.

There is no denying the pressure. But when the pressure is to appear transformed, rather than to be transformed, what we get is a performance — not a pivot.

The Data Mirage: How Poor Foundations Undermine AI Implementations

We don’t talk enough about the bedrock of AI, which is data. Because if we did, we would be forced to admit an uncomfortable truth: most organisations do not really understand their own data. And that means they are building their AI ambitions on shifting sands.

Despite all the chatter about data-driven decisions and AI-powered insights, the data itself is often inconsistent, incomplete, outdated, or simply incoherent. There is no real framework for how it is collected, cleaned, updated, or governed. No overarching sense of what is reliable, and what is just…there.

But that hasn’t stopped organisations from rushing headlong ahead.

AI implementations are often launched with immense excitement, and a surprisingly vague understanding of the data that fuels them. Tools are plugged in, pilots launched, and dashboards redesigned. But the foundation remains fragmented, and the inputs poor. And no amount of AI glitter can disguise that.

It’s a bit like trying to write a book using a dictionary full of misspelled words. Impressive, until you start reading it.

Data, in Theory. Dysfunction, in Practice.

One of the most ironic truths about modern AI implementation, and one we keep discovering in real client scenarios, is that it depends deeply on data integrity, and yet data is rarely treated with the strategic seriousness it deserves. Across functions, data is still a tactical concern, if it is a concern at all. At best, it is an afterthought in a CRM (or CMP) entry, or the forgotten field in an ERP update. It could be the inconsistent tag in a CMS, or even the several different spellings of the same geographical area under a single pin code.

Without fundamental data discipline, the AI that sits atop this hodgepodge just adds another layer of confusion — remixing flawed inputs into even more elaborate flawed outputs.
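To make the pin-code example concrete, here is a small Python sketch. The records, pin codes, and the `light_clean` helper are hypothetical, invented purely for illustration; the code simply counts how many spellings of a locality sit under each pin code, before and after a naive clean-up.

```python
from collections import defaultdict

# Hypothetical CRM rows: (pin_code, area_name). Illustrative data only.
records = [
    ("560038", "Indiranagar"),
    ("560038", "Indira Nagar"),
    ("560038", "indiranagar "),
    ("560038", "Indiranagr"),    # a typo
    ("400050", "Bandra West"),
    ("400050", "Bandra (W)"),    # an abbreviation
]


def distinct_spellings(rows, normalise=lambda s: s):
    """Map each pin code to the set of (optionally normalised) area names under it."""
    by_pin = defaultdict(set)
    for pin, area in rows:
        by_pin[pin].add(normalise(area))
    return dict(by_pin)


def light_clean(name):
    """Hypothetical helper: drop all whitespace and case-fold.
    Catches formatting noise, but not typos or abbreviations."""
    return "".join(name.split()).casefold()


raw = distinct_spellings(records)
cleaned = distinct_spellings(records, light_clean)

for pin in sorted(raw):
    print(f"{pin}: {len(raw[pin])} spellings raw, {len(cleaned[pin])} after light cleaning")
```

Run as written, it reports four spellings under 560038 shrinking to two, and two under 400050 staying at two. Light cleaning removes formatting noise, but the typo “Indiranagr” and the abbreviation “Bandra (W)” survive it. Resolving those takes a governed reference list of localities: the kind of unglamorous data discipline any AI layered on top depends on.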

Yet we speak of these tools as if they were visionary. We fail to appreciate that feeding them 12 years of unstructured meeting notes will not yield a business model, and that customer sentiment cannot be understood from a pile of half-tagged, context-free feedback forms.

This is not intelligence. This is guesswork, with a sprinkling of code glitter.

Until organisations are willing to invest in the ‘unsexy’ work of data strategy — consistency, accuracy, structure — their AI dreams will remain just that. Dreams.

The Illusion of Intelligence: Why Generative AI Isn’t What You Think It Is

It is easy to forget, in the glow of headlines and funding rounds, that Generative AI is not intelligent. At least, not in any way we traditionally understand the term.

AI does not think or reason. It does not know what you meant, or might be thinking; it knows only what has statistically followed similar phrases in the past. And yet, we are now entrusting it with tasks that demand nuance, judgment, and domain fluency. We also seem to believe that the larger the model, the better the result.

We seem to be placing a remarkable amount of faith in these systems: a willingness to believe that, because a GenAI tool can produce content that looks coherent, it must also be correct, meaningful, and strategic. This illusion has consequences.

In 2023, 73% of enterprise leaders reported piloting GenAI tools (McKinsey), but fewer than 24% had integrated them into core business processes. Even fewer had performance metrics tied to actual outcomes. The gap between experimentation and impact is massive — and growing.

Back in the real world, GenAI is being force-fitted into workflows it was never designed for: drafting contracts, triaging customer issues, synthesising meeting notes, rewriting old reports. All this with a faith-based optimism that the tool is “probably” right.

We often overlook that these models are not drawing insight from data. They are simply arranging words based on patterns in previous content, and they are bound by GIGO (garbage in, garbage out). If the source material is poor, outdated, or biased, so is the output.
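A deliberately tiny illustration of “arranging words based on patterns” and of GIGO: the Python sketch below is a bigram model, which only counts which word followed which in its training text and then picks the most frequent continuation. It is a toy, far simpler than the neural networks behind GenAI tools, and the refund-policy sentences are hypothetical; the shared principle is continuation by learned statistics rather than by understanding.

```python
from collections import Counter, defaultdict


def train_bigrams(corpus):
    """Count which word follows which: no meaning, only co-occurrence."""
    follows = defaultdict(Counter)
    for sentence in corpus:
        words = sentence.lower().split()
        for current, nxt in zip(words, words[1:]):
            follows[current][nxt] += 1
    return follows


def continue_text(follows, start, length=4):
    """Greedily append the most frequent next word seen in training."""
    words = [start]
    for _ in range(length):
        options = follows.get(words[-1])  # .get avoids creating empty entries
        if not options:
            break
        words.append(options.most_common(1)[0][0])
    return " ".join(words)


# Hypothetical training text in which an outdated claim outnumbers the current one.
corpus = [
    "the policy allows refunds within 30 days",
    "the policy allows refunds within 30 days",
    "the policy allows refunds within 14 days",
]

model = train_bigrams(corpus)
print(continue_text(model, "refunds"))
```

If the current policy is in fact 14 days, the model still answers “refunds within 30 days”, fluently and without hesitation, simply because the outdated sentence appears more often in its source material. Nothing in the counting step knows which version is true.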

Worse, the authority with which these tools regurgitate this data porridge often conceals the fact that they do not understand it. There is a simulation of comprehension, but no comprehension in reality.

And yet, organisations treat this simulation as substance, the equivalent of a human analyst or consultant. In reality, they are using a tool that is confident and articulate, but unthinking. Leaders need to realise that until we start asking harder questions about provenance, accuracy, and context, the outputs will continue to impress in form but falter in function.

This isn’t to say GenAI doesn’t have value. But the value lies not in assuming it will think for us, but in learning how to think better around it.

Levels of AI Implementations

A strategic maturity model for Generative AI implementation

| Level | What It Looks Like |
|---|---|
| Level 1: Theatre | Buzzwords in decks, no real data hygiene, experimental tools led by vendor demos. |
| Level 2: Cosmetic | A few working pilots, some marketing use cases, poor governance or data discipline. |
| Level 3: Functional | Defined scope of use, data pipelines in place, early-stage governance emerging. |
| Level 4: Strategic | AI aligned with customer needs and business goals. Strong data strategy. Measurable ROI. |

Why AI Implementations Fail Without Culture and Customer Focus

A 2023 BCG study found that 70% of digital and AI transformations fall short of their goals, often because of internal resistance and weak user alignment, which points to behavioural issues.

Across transformations, two things are consistently overlooked: the customer and the culture. Their absence is striking, considering how central they are to making AI perform. Yet surveys show that organisations keep deploying AI at scale without addressing either.

The Customer Gets Sidelined

AI projects promise better customer experiences (smarter recommendations, seamless support), but in reality, they are often scoped around optics and cost reduction. According to Deloitte (2024), nearly 60% of enterprise AI projects are internally focused, launched with minimal customer insight and no serious application of design thinking. The result is automation without empathy, dashboards that inform but don’t engage, and context-free chatbots that articulate but don’t connect.

These systems often feel cold and transactional — technically correct, but strategically hollow and internally focused.

Culture: The Quiet Failure

AI transformation should be about not just tools, but how people engage with them. That engagement is often thin because culture gets overlooked.

In a 2024 PwC survey, only 23% of employees in AI-active companies felt “very confident” about how AI affects their work. Fewer than 20% had a voice in shaping implementation. This indicates a top-down mandate, rather than a transformation. And when culture lags, capability does too.

The Real Risk is Trust

What erodes in these situations isn’t the tech, but trust. Customers end up navigating broken journeys. Employees feel replaced, not reskilled. Leaders discover that the deck doesn’t match the ground reality. And eventually, analysts figure this out too.

Unless organisations shift from a narrow focus on what AI can do to who it’s for and what values guide its use, most initiatives will stall. We’ve seen this before — in the early days of digital transformation. We’ll see it again, unless culture and customer focus become core, not afterthoughts.

A New Mandate for Leadership

For all the capital being poured into AI, one question remains under-asked: What kind of organisations will we end up building, or evolving into? This is not about resisting AI. It’s about rethinking what it means to lead through it.

In many enterprises, AI adoption is no longer a choice. It is a condition of perceived relevance, or even of long-term survival. But relevance can’t be built through procurement. It is shaped by purpose. And increasingly, the AI decisions leadership makes today mirror how the organisation sees itself: transactional or transformational, performative or purposeful. We may well see a good set of such examples emerge from the Indian IT services industry over the next few quarters.

From Buying Tools to Building Capability

AI is not a plug-and-play product, but a capability that has to be built. This capability cannot be bought off the shelf from vendors promising instant intelligence. It requires leadership clarity about which problems are worth solving, and about what success looks like: not just in efficiency terms, but in terms of customer trust, employee enablement, and organisational adaptability.

According to MIT Sloan’s 2024 research on AI-ready organisations, the highest performing companies invested 3x more in change management and capability-building than they did in tooling. They focused not just on deploying AI, but on preparing people, restructuring workflows, and rethinking metrics.

The Leadership Gap

What AI implementations, and the conversations around them, reveal (quite uncomfortably) is a widening leadership gap. Not everyone in a leadership position is equipped to lead in this new paradigm. Many are delegating decisions (see the tables at the end of this article) to tech vendors or AI “taskforces” without internal alignment, strategic clarity, or enough fluency to ask the right questions. As mentioned earlier, this repeats the blunders of digital transformation.

An AI-native world is ambiguous. Leadership needs a bias for evidence, not blind faith in dashboards. It needs fluency in ethics, context, and trade-offs, not just enthusiasm for models and metrics. And it asks of leaders a kind of humility: the willingness to say, “We’re not ready,” and to invest in the capability to become so.

Closing Arc: Beyond the Curve — Toward Strategic Maturity in AI

AI is not just a trend. It is an inevitability that tests how deeply organisations understand themselves, their data, and their customers, and whether their leadership has the discernment to deliver the substance of transformation. That discernment, rather than knee-jerk reactions, can drive the next decade of enterprise innovation.

The promise of AI is not in the model. It is in the model of leadership it demands.

Organisations that will thrive, we believe, are not necessarily the first adopters but the wise ones: guided by clarity, governed by values, and measured not just by KPIs, but by trust. We are in an era where strategy is not about what you implement, but why, for whom, and to what end.

The companies that understand this — and act accordingly — will shape not just the future of AI, but the future of business itself.

| GenAI Actually Is | But It’s Often Treated As |
|---|---|
| A pattern-recognition tool | A creative strategist |
| A remixer of existing data | An original thinker |
| Non-sentient, without understanding | A decision-maker |
| Useful for speed and structure | Capable of business insight |
| Context-blind and probability-driven | Context-rich and judgment-led |

| Stakeholder | What They Actually Want | What AI Projects Often Deliver |
|---|---|---|
| Customers | Seamless, contextual, humanised experiences | Transactional automations with poor design |
| Employees | Tools that empower decisions, not replace them | Interfaces that confuse or displace |
| Leaders | Sustainable competitiveness, risk-aware growth | Tactical wins dressed as strategic leaps |
| Boards/Investors | Clear ROI, long-term value, ethical assurance | Vague metrics, experimental tools, flashy demos |